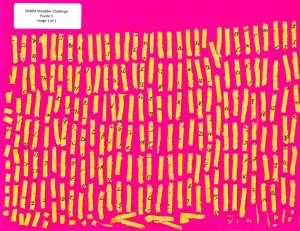

The DARPA Shredder Challenge from the point of view of Goldsong, 10th place. Would you have been able to solve the “easy” portion of the challenge? Find out by answering the questions in red.

The DARPA Shredder Challenge

The Defense Advanced Research Projects Agency (DARPA) was interested in understanding how people solve complex puzzles. They issued the DARPA Shredder Challenge, a contest to put together five shredded documents, each one progressively more difficult than the previous one, in about five weeks, from Oct. 27 to Dec. 2, 2011.

The chads obtained after shredding the documents were arranged and photographed. The contestants got these images and had to extract the chads from the images, orient them correctly and then rearrange them until the reconstructed portion of the original document was sufficient to answer a question (or questions) associated with the document. The contestants would get points only for answering the questions, not for assembling the document.

There were 9000 entries, 69 of which scored at least 1 of 50 possible points. I participated under the name Goldsong and finished in 10th place. This is a summary of my experience in the contest.

Puzzle 1

Like many other contestants, I found out about the challenge on slashdot.com, after it had already started and when many people had already solved this first puzzle. This puzzle had about 200 chads, i.e., it was a warm-up.

With a late start and a simple puzzle, I had no qualms about doing it by hand, in the most unsophisticated way possible: I cut the chads from the TIFF images in which they came and pasted them into Keynote, the Apple version of PowerPoint.

In hindsight, my selection of tools was poor: Keynote is a good presentation tool but it is not a drawing program, so using it as a canvas to assemble the chads was inefficient. It took me about 20 hrs to assemble enough chads to be able to read the message on the page, but a lot of this time was spent fiddling with Keynote instead of assembling the puzzle.

The puzzles only needed to be completed to the point at which one could answer the question associated with them and explain how the answer was figured out. Personally, I found answering the questions about as difficult as solving the puzzles. For this puzzle, the question was: “What is the appropriate title being referenced?”

Well… we can see the solved puzzle by clicking on the image. What would have been your answer? We’ll discuss the results at the end of the article.

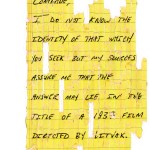

Puzzle 2

The second puzzle had about 350 chads but, unlike the first one, it had been written on lined paper, with two ink colors, and had a stain from a coffee mug, i.e., a lot of hints. Hence, it also looked pretty easy to do by hand. The time would come when I would need to put together a computational tool to help me assemble a puzzle, but this was not it.

I continued using Keynote. Clearly it was not the best tool for this task: I could not zoom in and out of the canvas and, since the canvas was limited in size, I had to use multiple, partially redundant canvases. Still, I had gotten better at using it, so I spent less time pulling pieces into Keynote and more time pondering how to connect them.

There were many ways to approach this puzzle: assemble the coffee mug stain, assemble the borders, assemble the chads with red ink, etc. The beautiful part is that all of these approaches worked equally well. In the end, it took me 20 hrs to put it together. Indeed, a computational tool was not needed for this puzzle either.

The question for this puzzle was: “What is the deciphered message?”

Not so easy, right? Give it a try. Remember that assembling the puzzle gave you no points; only answering the question gave you points.

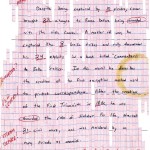

Puzzle 3

Puzzles 1 and 2 did not need a computational tool to solve them. Puzzles 4 and 5 definitely were going to need one. Puzzle 3 was an unknown. Should I solve it by hand or should I start to write the tool? Well… there was a pattern that appeared useful.

Puzzle 1 had 150 useful chads and it took me about 10 hrs to solve it (or at least it should have, if I had been used to Keynote from the beginning). Puzzle 2 had 300 useful chads and took about 20 hrs. Puzzle 3 had about 600 useful chads. It appeared that the puzzles had been selected so that each one was twice as hard to solve as the previous one. If that was the case, puzzle 3 should take 40 hrs to solve by hand, which was pretty doable. Thus, I had my answer... and boy, was I wrong!

In the beginning it looked as if assembling the puzzle by hand had been the right choice. After 40 hrs I had assembled about 80% of the puzzle. However, I had no idea how to answer the questions associated with the puzzle. And each time it was more difficult to put pieces together.

Unlike puzzles 1 and 2, the message in this puzzle was a drawing, not text. With text we can narrow down the possible characters that follow a partial word, but we cannot do this with a drawing. Likewise, the drawing used only one ink color, not two, and was drawn on white paper, not lined paper. Still, the worst part was that for the longest time I could not figure out what it was a drawing of.

In the end it took me about 100 hrs to assemble enough pieces to answer the two questions of this puzzle: “What is the indicated country?” (easy) and “What is the indicated city/town?” (hard). Can you answer them from the partially assembled puzzle?

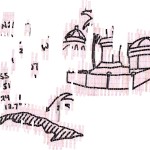

Puzzle 4

It did not look sensible to attack puzzle 4 by hand: too many chads, about 2300, most of them useful, and very few constraints. However, there were a few chads that stood out because of their distinctive ink color, handwriting or orientation. Unbeknownst to the contestants, these few chads contained the answers to the question associated with the puzzle: “What are the name and/or initials of the collaborators?”

Presumably, the “collaborators” were the people who had written the message of the puzzle. We did not know how many there were. I put together a few of these distinctive chads and got ‘Dave’ and ‘George’ in a matter of a few hours. I also found a 3rd collaborator but I did not realize it, so I did not submit it as an answer and did not get credit for it. Can you spot it?

Likewise, I did not realize that all the answers stood out from the general content of the puzzle. Hence, I did not try to assemble the words with distinctive handwriting or orientation. Instead I started to work on the computational tool that seemed the only viable way to solve puzzle 4. Big mistake! Although it was not possible to assemble the puzzle without the tool, it was completely feasible to answer the questions by hand, without assembling the whole puzzle.

At this point in time I was 5th on the leaderboard. Unfortunately, life caught up with me: friends from San Francisco arrived to spend the Thanksgiving weekend with us, and the hours that I had available to put into the challenge dwindled to nothing, marking the end of my participation in the contest. I never got to try puzzle 5. However, given that I did not have a computational tool in place, it was clear to me that I would not have been able to solve puzzle 5 in time.

Results

I got a total of 17 pts. I could have gotten 19 pts. if I had realized that I had an additional answer in puzzle 4. Thus, the highest that I could have ranked is 7th, still very far from the 50 pts. earned by the winning team.

Puzzle 1

2 pts: What is the appropriate title being referenced?

We needed to solve the whole puzzle because both “Litvak” and “1937” were necessary references embedded in the text of the message. Anatole Litvak directed two movies in 1937: “Tovarich” and “The Woman I Love”. The greeting in the message, “Comrade”, was the clue that “Tovarich” was the correct answer.

Puzzle 2

4 pts: What is the deciphered message?

I overthought this question. Initially, because of the message, I thought that the cipher was a simple substitution cipher such as the Caesar cipher. However, although the Caesar cipher is a very simple one, the enciphering in the message was even simpler.

There are 6 numbers underlined in the text: 10, 23, 8, 24, 18 and 21. At the bottom of the message, they show you what to do with the first three. Continue the pattern with the last three. The first 3 numbers correspond to the characters “JGO”. The last 3 correspond to “MEZ”. Hence, the deciphered message is “JGOMEZ”.
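One rule that reproduces these letters is a running sum: keep adding the underlined numbers, wrap around modulo 26, and read each total as a letter with A = 1 through Z = 26. This rule is an assumption inferred from the six numbers and the six letters, since the pattern shown at the bottom of the message is not spelled out here. A minimal Python sketch under that assumption (the function name `decipher_running_sum` is made up for illustration):

```python
from itertools import accumulate
from string import ascii_uppercase


def decipher_running_sum(numbers):
    """Decode by running sum modulo 26, with A = 1 ... Z = 26 (assumed rule)."""
    letters = []
    for total in accumulate(numbers):
        # Shift to 0-based so that a total of 26 maps to 'Z' instead of wrapping to 0.
        letters.append(ascii_uppercase[(total - 1) % 26])
    return "".join(letters)


underlined = [10, 23, 8, 24, 18, 21]
print(decipher_running_sum(underlined))  # JGOMEZ
```

The running totals are 10, 33, 41, 65, 83 and 104, which reduce to 10, 7, 15, 13, 5 and 26, i.e., J, G, O, M, E and Z.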

Puzzle 3

2 pts: What is the indicated country?

6 pts: What is the indicated city/town?

The country is easy enough to spot: Cuba. However, the city/town is very difficult to figure out. For a long time I thought that the coordinates in the message, which I had not been able to put together, were the coordinates of the city. It turns out that they were the coordinates of Havana and Nassau, meant to hint that the map was that of Cuba.

The city had to be deduced exclusively from the drawing. Initially, because of the dome, I thought that the drawing referred to a church. Eventually, I assembled enough chads to recognize the structure in the middle as a pedestal. From then on it was smooth sailing. A pedestal implies a statue. An image search for statues in Cuba gave me one in which I could see, in the background, a bandstand. The location was the Jose Marti park in Cienfuegos. A specific search for the park showed that the dome was that of the Cienfuegos City Hall. After the contest I found out that the drawing was based on the image of the Jose Marti park from Wikipedia.

Puzzle 4

12 pts: What are the name and/or initials of the collaborators?

I got 3 pts for ‘George’ and ‘Dave’. I would have gotten 2 more for ‘PK’. There were a total of 5 names. You would get 1, 3, 5, 8 or 12 pts if you found 1, 2, 3, 4 or 5 of the names, respectively.
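The totals quoted in the Results section can be checked with a few lines of Python. This is only a bookkeeping sketch: the table `PUZZLE4_POINTS` and the function `total_score` are illustrative names, and the entry for zero names found (0 pts) is an assumption.

```python
# Points for puzzle 4 by number of names found, as listed above.
# The 0 -> 0 entry is an assumption added for completeness.
PUZZLE4_POINTS = {0: 0, 1: 1, 2: 3, 3: 5, 4: 8, 5: 12}


def total_score(p1, p2, p3_country, p3_city, names_found):
    """Sum the points for puzzles 1-3 plus the tiered puzzle 4 award."""
    return p1 + p2 + p3_country + p3_city + PUZZLE4_POINTS[names_found]


actual = total_score(2, 4, 2, 6, names_found=2)   # 'George' and 'Dave'
with_pk = total_score(2, 4, 2, 6, names_found=3)  # if 'PK' had been submitted
print(actual, with_pk)  # 17 19
```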

Departing thoughts

The contest was a lot of fun and very challenging. I would have liked to find out about it before it started, but I don’t know how much that would really have benefited me. Also, although I like to work alone, it was obvious that it would have been great to be part of a team.

During the contest I was in touch with another team, the “Herded Rappers”, made up of people from JPL. I know four of its six members and think very highly of them: Mike Burl, Aaron Kiely, Matt Klimesh and Sam Dolinar. They entered the contest even later than I did and thus, when they asked me to join them, I was already in 5th place and declined the offer. However, eventually they did catch up with me and finished in 13th place. In retrospect, it would have made sense to join forces, but even in that case it is unlikely that we would have won.

The “Rappers”, like the winning team and many of the high-ranking teams, had put together a computational tool that helped them select pieces. You could ask the tool things like “Show me all the pieces with the top 20% dark and the bottom 40% white”. This looked very useful, but the level of automation was very low, i.e., all the decisions about how well one chad fit with another still required a person.
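To make the kind of query quoted above concrete, here is a toy sketch of such a filter. It is not the Rappers’ tool: the representation of a chad as rows of grayscale pixels (0 = black, 255 = white), the threshold of 128 and the cutoffs `min_dark` and `max_dark` are all assumptions chosen for illustration.

```python
def dark_fraction(rows, threshold=128):
    """Fraction of pixels darker than `threshold` in a list of pixel rows."""
    pixels = [p for row in rows for p in row]
    if not pixels:
        return 0.0
    return sum(p < threshold for p in pixels) / len(pixels)


def band(chad, start, stop):
    """Rows of `chad` between the fractional heights `start` and `stop`."""
    n = len(chad)
    return chad[round(start * n):round(stop * n)]


def matches(chad, min_dark=0.5, max_dark=0.05):
    """True if the top 20% is mostly dark and the bottom 40% is nearly white."""
    top = band(chad, 0.0, 0.2)
    bottom = band(chad, 0.6, 1.0)
    return dark_fraction(top) >= min_dark and dark_fraction(bottom) <= max_dark


# Three made-up chads, 10 rows by 4 pixels each.
chads = {
    "inked_top": [[0] * 4] * 2 + [[255] * 4] * 8,
    "blank": [[255] * 4] * 10,
    "inked_bottom": [[255] * 4] * 8 + [[0] * 4] * 2,
}
selected = [name for name, chad in chads.items() if matches(chad)]
print(selected)  # ['inked_top']
```

A filter like this only narrows the candidates; deciding which of the selected chads actually fit together is still left to a person.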

The right approach: Constraint Satisfaction

Useful as the Rappers’ tool was, I was surprised that it was so different from what I had in mind, i.e., an automated tool based on constraint satisfaction. I was not expecting such a tool to solve the problem, but I was expecting it to find an approximate solution that could be useful. Thus, it could give you the most likely configurations of chads that are adjacent to a particular one. In this case, each chad constrains all the others and the set hopefully settles on a reasonable configuration. Although I did not finish my tool, there was a team that wrote one in exactly this vein.

Andrew Gallagher and Aaron Deever participated in the DARPA Shredder Challenge as team EK55 and placed 17th. They published a paper, “Semi-automatic Assembly of Real Cross-cut Shredded Documents”, in 2012 ICIP (IEEE Intl. Conf. Image Processing) in which they describe their approach. In a first step, they segment the pieces out of the image and rotate them to their correct orientation. This step, completely automatic, was also done by many other teams. However, they followed it with a second step based on constraint satisfaction: they automatically paired chads whose boundaries shared ink strokes! As expected, the algorithm did not always get it right, but they could verify the selection and modify it. With this tool they were able to solve puzzles 1 and 2 and parts of puzzles 3 and 4. If there had been a 6th puzzle and a bit more time, I would have put my money on them to win the whole thing.
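The idea of pairing chads whose boundaries share ink strokes can be illustrated with a toy scoring function. This is a sketch of the general idea only, not Gallagher and Deever’s algorithm: the edge representation (one 0/1 ink flag per row along each vertical edge), the +1/-1 scoring and the names `edge_score` and `rank_right_neighbours` are assumptions made for the example.

```python
def edge_score(right_edge, left_edge):
    """Agreement of two facing edges: +1 where ink meets ink, -1 where ink meets blank."""
    score = 0
    for a, b in zip(right_edge, left_edge):
        if a and b:
            score += 1
        elif a != b:
            score -= 1
    return score


def rank_right_neighbours(name, chads):
    """Candidates to sit to the right of `name`, best score first."""
    right = chads[name]["right"]
    scored = [
        (edge_score(right, other["left"]), other_name)
        for other_name, other in chads.items()
        if other_name != name
    ]
    # Sort by descending score, breaking ties by name for a stable result.
    return [n for _, n in sorted(scored, key=lambda t: (-t[0], t[1]))]


# Made-up chads: 1 marks ink touching the edge at that row, 0 marks blank paper.
chads = {
    "a": {"left": [0, 0, 0, 0, 0, 0], "right": [0, 1, 1, 0, 0, 1]},
    "b": {"left": [0, 1, 1, 0, 0, 1], "right": [0, 0, 0, 0, 0, 0]},
    "c": {"left": [1, 0, 0, 1, 0, 0], "right": [0, 0, 1, 0, 0, 0]},
}
print(rank_right_neighbours("a", chads))  # ['b', 'c']
```

In a semi-automatic workflow, a ranked list like this would be shown to a person, who accepts or corrects the top suggestion before moving on to the next chad.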

A few months after the DARPA Shredder Challenge finished, Gallagher published a second paper that extended the tool to solve puzzles in which the orientation of the pieces was not known: “Jigsaw Puzzles with Pieces of Unknown Orientation”. The paper was presented at 2012 CVPR (IEEE Intl. Conf. on Computer Vision and Pattern Recognition, pp. 382-389) and Gallagher kindly made the code available. His algorithm was used to solve puzzles with square pieces, which removes all the hints given by the shape of the pieces.

A critique of Crowdsourcing

In my mind, there is no doubt that constraint satisfaction, à la Gallagher and Deever’s tool, is the only effective way to solve this type of puzzle on a consistent basis. My own approach of doing them by hand is cost-effective only as an alternative to writing the tool when there is a time constraint. However, if the tool already exists, it will always win against a manual approach and against approaches that only automate chad extraction and orientation.

Crowdsourcing, much talked about during the contest as the ‘obvious’ approach to tackle this problem, has many problems: besides being subject to sabotage (as became evident during the contest), it requires making sensitive material public or, at the very least, giving access to it to many more people than would otherwise have had it.

In addition, crowdsourcing only works if the members of the crowd are already good at solving the given problem, i.e., we cannot just tell 1000 people selected at random to work on the problem; we need 1000 people who are already good at problem solving, and this is far from the average Joe. Just look at the people who were both attracted to the challenge and did well: university professors and researchers with a solid background in computer science and/or mathematics and, more often than not, familiar with computer vision and/or pattern recognition. This is not the typical profile of the military personnel to whom the problem might be ‘crowdsourced’.

Finally, by its very nature, the demographic that is good at solving problems of this type makes a terrible crowd because its members will lose interest and will want to move on to new problems. Consider how many of the members of the top 100 teams would be willing to work on a crowdsourcing problem one time: many. However, how many of those would be willing to work on it two times, or three, or 100 times? Very few to none.

The DARPA Shredder Challenge was an open contest that ran from Oct. 27, 2011 to Dec. 2, 2011. The DARPA website of the contest, “http://archive.darpa.mil/shredderchallenge/”, is no longer online.